Distributed Web Services

Date of Last Update: 8 October 2025

This document provides a high-level overview of an active project: the migration of our web services to a distributed architecture (distributed web services). The intended audience is Linux system administrators with a basic background in web servers (Apache or NGINX), database systems (MySQL, MariaDB, PostgreSQL, etc.), and networking fundamentals. As this is a living document, there are apt to be errors, incomplete information, and marginal English usage from time to time (copy editing comes later) as I continue to update it. Any and all comments, suggestions, and corrections are most welcome.

Setting up and running low-volume websites is straightforward. For years, we managed many such sites in a simple LAMP (Linux, Apache, MySQL, PHP) environment. These sites launch quickly, demand minimal administrative effort, and perform reliably most of the time. We’ve run them on various Linux distributions, on hardware ranging from small SoC-based systems through much faster, more modern standard servers (Intel and AMD based). Out-of-the-box configurations combined with our standard templates allowed us to manually bring up a new site in 30 minutes or less, including all the related DNS and database configuration.

Despite their easy setup and administration, these single-server sites have many drawbacks. Some of these include:

- Scalability: as site usage increases it is not easy to scale server capabilities. Faster hardware alone is not a long term solution.

- Resilience and Reliability: problems with the single server can take a site completely offline, and so can system updates and maintenance. 100% uptime is impossible. Distributed systems remove the single point of failure and spread risk and processing across many redundant resources.

- Security: network and application firewalls help; however, the single-server approach forces the web server to be directly visible to the Internet, which increases the number of attack vectors.

- Performance: many functions contribute to server loading including backend compute requirements, static resource delivery, SSL/TLS termination and others. Single machine solutions do not offer the ability to spread these functions across available resources.

The Path Towards A Distributed Architecture

Our gradual shift to a distributed architecture proved more complex than we initially anticipated. We needed to learn many new technologies, each with its own learning curve, at least at a basic level. While many of these technologies (see below) offered excellent documentation, their relationships and interactions with each other were often poorly documented or poorly understood. In retrospect, I should have invested more time researching textbooks on distributed system design, particularly for web applications, which might have helped. If you know of any, please recommend them, and I’ll grab a copy or three for my reading list. In theory, this process shouldn’t have been so challenging, as none of the technologies are particularly new, and distributed web architectures are now fairly common. Most web and cloud providers use most, if not all, of the components described here.

A few of the recommended textbooks on distributed system design include:

- Designing Data-Intensive Applications by Martin Kleppmann – A comprehensive guide to building scalable, reliable, and maintainable systems, covering distributed systems and web architectures.

- Distributed Systems: Principles and Paradigms by Andrew S. Tanenbaum and Maarten Van Steen – A foundational text on distributed systems, with clear explanations of concepts and practical applications.

- Building Microservices by Sam Newman – Focused on web distributed design, this book explores microservices architectures and their implementation in modern web systems.

A basic tenet of good system design is to design, build, and test incrementally, changing as few variables as possible at each step. We did this as much as possible, but as we progressed we found times when the only way forward was to integrate several technologies (new to us) at once and live with the resulting confusion when things did not go according to plan. The rest of this document first reviews the basic technologies and some corresponding terminology. The next section outlines our incremental approach to a final (or reasonably close) solution or set of solutions.

Technologies

Our historic web services operated self-contained on a single server. These included the web server, web protocol support, database, and application programming language (PHP or Python for most of our sites). All external network communication came in via either port 80 or 443 for the most part. The web server and application server were one and the same and everything mostly worked out of the box. Life was simple and good. As long as you did not try to push the systems very hard or scale up.

Distributed web services solve the problems listed above, and very nicely, but at a cost: complexity. Actually, the resulting system is not that complex or hard to get one’s head around. The main challenge we had was working through several initial learning curves at once and then integrating all this new knowledge. The good news is that the basic building blocks are services that many administrators have experience with in one form or another.

We still use Apache as one of our building blocks, MariaDB (a MySQL alternative) for database service, and the latest version of PHP to run the application engine.

We now break the web application down into simpler components. These components run as separate services that communicate across the local network. Databases and files need to be accessible across the cluster and consistent with each other at all times. PHP itself has become a network service, with better concurrency and performance.

Web Servers

We use a traditional two-layer model for web services. The outer or perimeter layer faces the Internet and is responsible for communicating with the user, SSL/TLS termination, routing application requests to the inside network, and in some cases serving static files. We’ve also done HTTP protocol conversion in test setups, but we will probably do little, if any, of this in production. Servers at this layer are subject to attack from the Internet. Our USF firewall configuration provides some protection here, but the isolation of our web application servers provides a needed additional layer of protection.

The inside layer or network hosts the web application servers. These servers communicate solely with the perimeter layer servers (we’ll introduce more fitting names below) and not directly with users. These compute servers handle communication with the underlying database and generate dynamic web content. This division of responsibilities strengthens web security, enables incremental service scaling, and lets us direct resources to the areas that need them most.

For our network we use a combination of Apache and NGINX web servers. Some recent estimates indicate that Apache and NGINX each hold between 35% and 40% market share, with NGINX-powered sites increasing fastest. Combined, these two technologies power approximately 75% of websites. Either works for front-end or back-end applications, but Apache typically serves as an application server on the inside network, while NGINX thrives in front-end or communication-centric roles.

Origin Servers

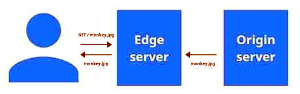

Origin servers in our terminology are the backend web application servers on the inside network. These Apache servers run replicated versions of the web applications we provide. A more complete definition (from Grok):

An origin server is the primary server that hosts the original content or resources requested by a client, such as a web browser or application. It’s where the website’s files, data, or application logic reside, serving as the authoritative source for delivering content like web pages, images, or APIs directly to users or through intermediaries like CDNs (Content Delivery Networks).

For example, when you visit a website, the origin server processes your request, retrieves the necessary data (e.g., HTML, images, or database content), and sends it back to your browser. In setups using CDNs, the origin server provides the original content to the CDN, which caches it to serve users faster from edge locations.

With the exception of static file content (images, documents, CSS, JavaScript, and other non-changing content) this is the definition we use. In our case some static content may actually be provided by the frontend servers (more on that later).

Reverse Proxy and Load Balancing

A reverse proxy server is a web server that is positioned between the user browser and an origin server. In our terminology this would be the server that runs on the frontend and faces the Internet (our outer layer). A more complete definition (from Grok):

- Load balancing: Distributing traffic across multiple backend servers to improve performance and reliability.

- Security: Hiding server details, protecting against attacks, and handling SSL/TLS encryption.

- Caching: Storing frequently requested content to reduce server load and speed up responses.

- Request routing: Directing requests to specific servers based on URL, headers, or other criteria.

- Compression: Reducing data size to improve transfer speed.

These servers focus on communication with the user’s browsers on the Internet side and act as a transparent go-between with origin servers. We run NGINX servers as reverse proxies which provide all of the above functionality. Proxies directly serve static file content, further reducing the origin server’s load.
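The split between static content served by the proxy and dynamic content passed to an origin server can be sketched as a simple routing decision. This is only an illustration in Python, not our NGINX configuration: the function name `choose_handler` and the extension list are assumptions made for the example.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Hypothetical list of file types the proxy would serve itself.
STATIC_EXTENSIONS = {
    ".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".pdf", ".woff2",
}


def choose_handler(url: str) -> str:
    """Return "proxy" for static files, "origin" for everything else."""
    path = urlsplit(url).path                      # drop any query string
    suffix = PurePosixPath(path).suffix.lower()    # e.g. ".css", or "" for "/"
    return "proxy" if suffix in STATIC_EXTENSIONS else "origin"


if __name__ == "__main__":
    for url in ("/wp-content/themes/site/style.css?ver=6.8", "/index.php", "/"):
        print(url, "->", choose_handler(url))
```

Anything that is not recognizably static falls through to the origin servers, which is the conservative default: a misclassified dynamic request would otherwise never reach the application.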

NGINX reverse proxies transparently handle load balancing across multiple origin servers. In our network we also use redundant NGINX reverse proxy servers which rely upon DNS load balancing to spread load between themselves.
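To make the load balancing idea concrete, here is a minimal Python sketch of round-robin selection, which is the default method NGINX applies to a group of upstream servers. The class, its method names, and the server names are hypothetical, and real proxies add weights, health checks, and timeouts that are left out here.

```python
class RoundRobinBalancer:
    """Hand out origin servers in turn, skipping any marked as down."""

    def __init__(self, origins):
        if not origins:
            raise ValueError("at least one origin server is required")
        self._origins = list(origins)
        self._down = set()
        self._next = 0  # index of the next server to try

    def mark_down(self, origin):
        self._down.add(origin)

    def mark_up(self, origin):
        self._down.discard(origin)

    def pick(self):
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self._origins)):
            origin = self._origins[self._next]
            self._next = (self._next + 1) % len(self._origins)
            if origin not in self._down:
                return origin
        raise RuntimeError("no healthy origin servers available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["origin-a", "origin-b", "origin-c"])
    print([lb.pick() for _ in range(4)])
    lb.mark_down("origin-b")
    print([lb.pick() for _ in range(3)])
```

DNS load balancing between the proxies themselves works on a similar rotation principle, except that the rotation happens in the DNS answers rather than inside a single process.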

Hypertext Transfer Protocol (HTTP)

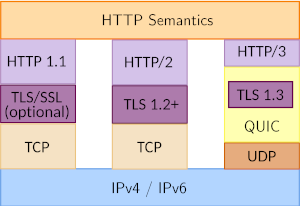

The HyperText Transfer Protocol (HTTP) is a set of standards that define how web browsers and servers communicate with each other. Many versions of HTTP have existed, starting with version 0.9 in 1991. Three versions remain in widespread use across the Internet: HTTP/1.1, HTTP/2 (h2), and HTTP/3 (h3). Statistics on protocol adoption differ across studies, but just over half of global sites still rely on HTTP/1.1. HTTP/2 and HTTP/3 share the remaining usage, with some studies favoring one protocol and others favoring the other. The use of HTTP/1.1 will likely continue to decline over time in favor of h2 and h3.

HTTP/1.1

Since its introduction in 1997, HTTP/1.1 has become the de facto standard for communication across the World Wide Web. Sitting on top of TCP in the protocol stack, it is a reasonably simple application layer protocol. Version 1.1 made persistent connections the default, which allows communicating hosts to reuse an already established TCP connection, and added pipelining, which lets a client send several requests without waiting for each response. This reduces connection establishment overhead and makes for much faster page loads. It also introduces other inefficiencies into the stream: responses must still come back in request order, so one slow response delays everything queued behind it. Version 1.1 also introduced the Host header, making it possible to easily support virtual hosts (spanning domains) on a single web server.

Many, if not most, simple sites across the Internet continue to rely upon version 1.1. Self hosted sites running a default Apache configuration (still the norm for this segment) come with full HTTP/1.1 support. Bringing up and configuring sites is quick and simple. All web browsers understand HTTP/1.1 so there are no interoperability issues at the protocol level.
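The role of the Host header in name-based virtual hosting can be sketched in a few lines of Python. This is an illustration only: the domain names, directory paths, and function names are hypothetical, and a real server handles many more cases (IPv6 literals, malformed requests, and so on).

```python
# Hypothetical mapping of site names to document roots.
VHOSTS = {
    "example.org": "/var/www/example.org",
    "blog.example.org": "/var/www/blog",
}
DEFAULT_ROOT = "/var/www/default"


def parse_host(raw_request: bytes) -> str:
    """Extract the Host header value from a raw HTTP/1.1 request."""
    head = raw_request.split(b"\r\n\r\n", 1)[0].decode("iso-8859-1")
    for line in head.split("\r\n")[1:]:            # skip the request line
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":
            return value.strip().lower()
    raise ValueError("HTTP/1.1 requests must carry a Host header")


def document_root(raw_request: bytes) -> str:
    """Pick the site to serve based only on the Host header."""
    host = parse_host(raw_request).split(":")[0]   # drop an optional port
    return VHOSTS.get(host, DEFAULT_ROOT)


if __name__ == "__main__":
    request = b"GET / HTTP/1.1\r\nHost: blog.example.org\r\nConnection: keep-alive\r\n\r\n"
    print(document_root(request))
```

Both sites share one IP address and one listening socket; the only thing that distinguishes the requests is the header the browser sends.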

HTTP/2 (h2)

HTTP/2 (frequently referred to simply as h2) was first introduced in 2015 and is a significant extension of the previous 1.1 standard. Like HTTP/1.1, it sits on top of TCP in the protocol stack. It shares the basic request and header semantics, allowing for backward compatibility. It aimed to improve website loading times through features like multiplexed streams (within a single TCP connection), server push, header compression, and a binary framing format. Adding stream multiplexing at the application layer solved one of the biggest bottlenecks of HTTP/1.1: the head-of-line blocking that happens when one large data element blocks delivery of the rest of the page. Header compression and the binary format reduce bandwidth use, which contributes to faster page download times.

There is of course a lot more to h2 than the quick summary above, but that is outside the scope of this document. The takeaway is that moving from HTTP/1.1 to HTTP/2 results in very significant performance gains for most modern sites. We certainly saw this in our testing and comparisons. Best of all, adding h2 support at the web server level (either Apache or NGINX) is trivial. These days all modern browsers (desktop and mobile) support h2, so there are no known interoperability problems. Given how simple it is to adopt h2 at the server level, we expect HTTP/1.1 to phase out before long (our opinion). Our new and migrating sites now support h2 as the standard, with the option to revert to HTTP/1.1 if necessary.
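The effect of head-of-line blocking and of multiplexing can be shown with a toy model in Python. It treats each response as a number of equal-sized chunks sent over one connection, one chunk per tick. This is a deliberate simplification and an assumption of the sketch: it ignores real HTTP/2 framing, prioritization, and flow control, and the function names are made up for the example.

```python
def in_order_completion(sizes):
    """HTTP/1.1 style: each response is sent in full before the next starts."""
    done, clock = [], 0
    for size in sizes:
        clock += size
        done.append(clock)
    return done


def multiplexed_completion(sizes):
    """HTTP/2 style: streams take turns, one chunk each per round."""
    remaining = list(sizes)
    done = [0] * len(sizes)
    clock = 0
    while any(remaining):
        for i, left in enumerate(remaining):
            if left:
                clock += 1
                remaining[i] = left - 1
                if remaining[i] == 0:
                    done[i] = clock   # tick at which stream i finished
    return done


if __name__ == "__main__":
    sizes = [10, 1, 1]   # one large image followed by two small files
    print("in order:   ", in_order_completion(sizes))     # [10, 11, 12]
    print("multiplexed:", multiplexed_completion(sizes))  # [12, 2, 3]
```

The total transfer time is the same in both cases, but with multiplexing the two small files arrive after 2 and 3 ticks instead of waiting 11 and 12 ticks behind the large one.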

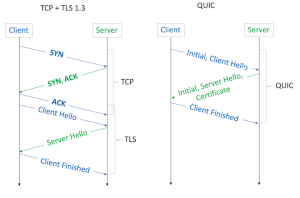

HTTP/3 (h3)

The latest major update to HTTP, HTTP/3 (or just h3), was published as a proposed standard by the IETF in June 2022. Unlike previous versions of HTTP, h3 does not sit on top of TCP in the protocol stack but instead runs over QUIC, a purpose-built transport protocol that itself runs on top of UDP. QUIC (originally an acronym for Quick UDP Internet Connections) is typically implemented in user space and provides congestion control, loss recovery, and stream multiplexing, with transport layer security (TLS 1.3) built in. The integration of TLS provides additional efficiencies not possible otherwise, in particular with connection establishment. QUIC also solves the head-of-line blocking that h2 still suffers within TCP, where a single lost packet stalls every stream on the connection. Similar to how h2 added multiplexing at the application protocol level, h3 does the same at the transport layer.

HTTP/3 is a bit more efficient with network resources, resulting in slightly better performance. It also works better with mobile devices as they move between networks (cellular or Wi-Fi).

One problem that we did find with HTTP/3 has to do with being able to support multiple virtual hosts on a single IP address (name based virtual hosts). With HTTP/1.1 and HTTP/2 this is simple in the server (either Apache or NGINX). It does not seem possible (at least at this time) to do this with h3 on a single IP address in either Apache or NGINX. IP based virtual hosts work fine, but depending on available IP address resources this could be problematic. Servers must operate as multihomed, actively listening on multiple IP addresses, which increases their complexity, administration, and setup time.

Our limited testing of h2 vs. h3 did not uncover any significant differences in performance. That said, the testing was very informal, and we did not do any connection re-establishment comparisons (moving between cellular and Wi-Fi), where h3 should have done better. We tested with various desktop browsers (Chrome, Edge, Firefox) and their Android equivalents. Connections were made both over the normal Internet and through a dedicated WireGuard tunnel we have with the data center.

With both h2 and h3 testing of the reverse proxy we had consistent problems with NGINX overwhelming the Apache origin servers on pages with high file inclusion counts (common with some WordPress pages). We were able to mitigate many of these through NGINX HTTP header directives but not all. Later in our testing when we were able to access local GlusterFS files (see below), we saw a huge drop in origin server loading, a corresponding increase in performance and page responsiveness, and increased reliability.

As an aside, it became clear that a lot happens between the reverse proxy and the origin server and that HTTP headers play a critical role here. My focus was on getting the network up and running rather than digging into the guts of the protocol and the resulting interactions, so I did not pursue this as far as I would otherwise have liked. If anyone knows of any good reference material in this area, please let me know. Never a shortage of new stuff to read and learn, it seems.
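As a rough illustration of the kind of header handling involved, the Python sketch below builds the headers a reverse proxy commonly adds before passing a request to an origin server. `X-Forwarded-For`, `X-Forwarded-Proto`, and `X-Real-IP` are widely used conventions; the function name and the sample addresses are hypothetical, and this is not a description of our actual NGINX configuration.

```python
def forwarded_headers(client_ip, scheme, host, incoming=None):
    """Return the headers a proxy would send upstream for one request."""
    incoming = incoming or {}
    # Append this client to any chain left by an earlier proxy.
    prior = incoming.get("X-Forwarded-For")
    chain = f"{prior}, {client_ip}" if prior else client_ip
    return {
        "Host": host,                   # keep the site name the browser asked for
        "X-Forwarded-For": chain,       # who the original client was
        "X-Forwarded-Proto": scheme,    # "https" even if the hop to the origin is plain HTTP
        "X-Real-IP": client_ip,
    }


if __name__ == "__main__":
    print(forwarded_headers("203.0.113.7", "https", "blog.example.org"))
```

Without headers like these, the origin server only ever sees the proxy's address and a plain HTTP request, which can confuse applications that generate absolute URLs or log client addresses.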

HTTP Secure (HTTPS)

HTTPS is the secure version of HTTP. It encrypts the data sent over the wire between web servers and their clients (usually browsers). This stops anyone snooping on the local network or on intermediate networks (eavesdropping and man-in-the-middle attacks) from easily viewing web data. This is critically important for transmission of sensitive data such as financial details, passwords, health care records, and other private information.

HTTPS uses an encryption protocol, Transport Layer Security (TLS), the successor to Secure Sockets Layer (SSL), to secure communication. TLS uses public key cryptography to authenticate the server and to establish unique session keys that both sides of the connection use to encrypt and decrypt the session data. Websites that support HTTPS need to have a valid public certificate issued by a trusted certificate authority. In the early days of HTTPS, obtaining a valid certificate was a fair amount of work, and yearly fees added to the cost of maintaining the domain. Valid, free, and easily maintainable certificates are available these days, removing cost as a barrier.
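On the client side, the certificate check described above is what a TLS library performs by default. The short Python sketch below uses the standard `ssl` module to build a client context that insists on a certificate from a trusted authority and on a matching host name. The function name is hypothetical, and the TLS 1.2 floor is an assumption chosen for the example rather than a setting taken from our servers.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client-side TLS settings that require a valid, trusted certificate."""
    context = ssl.create_default_context()            # loads the system's trusted CAs
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed floor; refuse older protocols
    return context


if __name__ == "__main__":
    ctx = strict_client_context()
    print("certificate required:", ctx.verify_mode == ssl.CERT_REQUIRED)
    print("host name checked:   ", ctx.check_hostname)
```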

HTTP/1.1 allowed for the optional configuration of a security layer to implement HTTPS. HTTP/2 is in practice only available over TLS because browsers only support it that way, and HTTP/3 requires encryption by design. In our configurations we still listen on HTTP port 80 for incoming requests, but return 301 (permanent redirect) responses to tell browsers to try again using HTTPS. The redirects are usually immediate, and users seldom notice.
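The redirect itself is a very small response. The Python sketch below builds one by hand to show what the browser receives; the function name and sample host are hypothetical, and in practice the web server generates this response from a one-line configuration rule.

```python
def https_redirect(host: str, target: str) -> bytes:
    """Build a 301 response pointing the browser at the HTTPS version of a URL."""
    hostname = host.split(":")[0]                 # drop ":80" if the client sent it
    location = f"https://{hostname}{target}"
    return (
        "HTTP/1.1 301 Moved Permanently\r\n"
        f"Location: {location}\r\n"
        "Content-Length: 0\r\n"
        "Connection: close\r\n"
        "\r\n"
    ).encode("ascii")


if __name__ == "__main__":
    print(https_redirect("example.org:80", "/about?lang=en").decode("ascii"))
```

Because the status is 301 (permanent), browsers may cache the redirect and go straight to HTTPS on later visits.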

Let’s Encrypt

Let’s Encrypt is a non-profit certificate authority run by the Internet Security Research Group (ISRG). It is the world’s largest certificate authority, used by more than 600 million websites. We’ve been using their certificates for almost as long as the organization has been around (approximately 10 years at the time of this writing) and have found the tools and certificates easy to use and reliable.

Both Apache and NGINX web servers include built-in support, and the certbot utility automatically updates their configurations when creating or renewing certificates.
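Renewal decisions come down to simple date arithmetic on the certificate's expiry date. The Python sketch below shows the calculation using the `notAfter` text format that Python's `ssl` module reports for a certificate. The function names and the 30-day threshold are assumptions for illustration; certbot applies its own renewal policy.

```python
import ssl

RENEW_WITHIN_DAYS = 30   # assumed threshold for this example


def days_remaining(not_after: str, now_epoch: float) -> float:
    """Days between now and the certificate's notAfter timestamp."""
    return (ssl.cert_time_to_seconds(not_after) - now_epoch) / 86400


def needs_renewal(not_after: str, now_epoch: float,
                  threshold_days: int = RENEW_WITHIN_DAYS) -> bool:
    """True when fewer than threshold_days remain before expiry."""
    return days_remaining(not_after, now_epoch) < threshold_days


if __name__ == "__main__":
    now = ssl.cert_time_to_seconds("Jan  1 00:00:00 2026 GMT")
    for expiry in ("Jan 15 00:00:00 2026 GMT", "Mar  1 00:00:00 2026 GMT"):
        print(expiry, "-> renew" if needs_renewal(expiry, now) else "-> ok")
```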

With certificates being so simple to install and maintain, and at no cost, there really is no reason for any site not to be running HTTPS anymore.

PHP

PHP is a server-side scripting language mainly used for web development. It is platform independent, integrates easily with backend database systems, is easily understood, and has a huge install base. Most popular content management systems, including WordPress and Drupal, run on PHP. It is not one of my personal favorite languages, as new major releases can have backward compatibility issues resulting in broken code. But my preferences aside, it is the engine that drives most of our sites, so making sure we have proper tuning and integration into our infrastructure is critical.

Note on PHP / Apache Upgrading: We’ve encountered more issues when upgrading PHP and Apache than perhaps any other package or combination. At times the cause is PHP and at others it is Apache. On the PHP side, we’re running version 8.4 at the time of this writing. Since PHP 7, we’ve faced no language-specific problems, though some likely exist. Prior to that, each major upgrade was a nightmare of trying to keep web applications up with minimal downtime. The moral of the story: PHP upgrades seem to be more reliable than before, but it is best to check and double check everything even when doing incremental upgrades (say from 8.2 to 8.3).

Apache upgrades typically proceed smoothly, except for issues with PHP modules. The Apache PHP modules connect PHP to Apache. Each PHP sub-release corresponds to a new Apache PHP module release. However, in our Ubuntu setup, the Apache upgrade fails to update the mapping to the new PHP module version. Simultaneously, the system often removes the old module version, causing runtime errors. To resolve this, use the a2dismod and a2enmod utilities to remove the previous PHP modules and enable the new ones. This process discards any changes to the module or PHP configuration, requiring manual checks and adjustments to the configuration files to meet your site’s needs. After applying these fixes, the system functions properly. However, this complicates the upgrade process, increases time requirements, and creates opportunities for errors in the system. OK, I’ll end my rant now.

In our distributed architecture, we run PHP code only on the origin servers. These all run Apache 2. There is no need for PHP configuration on any of our reverse proxy NGINX machines. Proper installation and configuration under Apache for the origin servers is however critical.

A complete rundown on PHP configuration is outside the scope of this document. Many aspects of PHP allow adjustment and tuning, enough to fill an entire book. There are, however, two aspects that deserve our attention: the Apache Multi-Processing Modules (MPMs) and the PHP FastCGI Process Manager (PHP-FPM, discussed below).

Apache’s Multi-Processing Modules (MPMs) are components (Apache modules) that determine how the server handles multiple requests, managing processes and threads for concurrent connections. They are:

- Prefork: Creates a separate process for each connection. Each process handles one request at a time. It is non-threaded and uses more memory than the other options, but it is safe to use with non-thread-safe code and libraries. Suitable for high-isolation needs.

- Worker: Uses a hybrid process-thread model. A fixed number of processes spawn multiple threads, each handling a connection. It’s more memory-efficient than Prefork and better for handling higher concurrency.

- Event: An optimized version of Worker, designed for keep-alive connections. It uses a dedicated thread to manage idle connections, freeing worker threads for active requests. Best for high-traffic scenarios with many persistent connections.

The choice of what MPM module to configure is highly dependent on both the expected workload mix as well as available server resources. For our network we use the mpm_event module configured to match our available server resources.

PHP-FPM

Standard out of the box Apache and PHP installations integrate PHP into Apache. The Apache server directly runs the PHP code. This setup has worked well for many years and continues to do so for low to medium volume sites. It does not always scale well though and there is no way to separate the PHP processing from the web service.

PHP FastCGI Process Manager (PHP-FPM) is a separate service that runs on the same machine as the web server, or optionally on its own platform. It provides better scalability and performance over the standard PHP configuration and supports higher traffic loads. This of course comes at a price – complexity. But for high volume sites where performance is key, it is the logical choice.

There is a lot to know about PHP-FPM configuration and testing, which I will not cover in detail here. Tuning PHP-FPM, much like MPM-Event above, involves matching the server configuration to the expected types of use and the server resources available. It is best to configure MPM-Event and PHP-FPM together on machines where they co-exist (the most common configuration). Both make assumptions about which resource limits to apply, in particular available memory. One cannot size each of them against total system memory, for instance; when both are in play (most of the time) it would then be trivial to run out of memory. Properly configured systems, however, deliver very fast web services that operate within the physical limits of the server they run on.
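The shared memory budget described above can be sketched as simple arithmetic. This is only an illustration: the total memory, the amount reserved for the OS and database, the share given to PHP, and the per-process sizes are all hypothetical figures, and real values should come from measuring the actual processes on the server.

```python
# Hypothetical sizing sketch. Every number below is an illustrative assumption,
# not a measured value from our servers.

def split_memory_budget(total_mb, reserved_mb, php_share):
    """Split the memory left after OS/database reservations between PHP-FPM and Apache."""
    available = total_mb - reserved_mb
    if available <= 0:
        raise ValueError("reserved memory exceeds total memory")
    php_mb = int(available * php_share)
    apache_mb = available - php_mb
    return php_mb, apache_mb


def max_processes(budget_mb, per_process_mb):
    """Largest number of processes of a given average size that fits in the budget."""
    return budget_mb // per_process_mb


# Assumed: 16 GB machine, 4 GB held back for the OS and database, 75% of the rest for PHP.
php_mb, apache_mb = split_memory_budget(total_mb=16384, reserved_mb=4096, php_share=0.75)

# Assumed average resident sizes: 64 MB per PHP-FPM worker, 96 MB per Apache process.
fpm_children = max_processes(php_mb, per_process_mb=64)
apache_procs = max_processes(apache_mb, per_process_mb=96)

print(f"PHP-FPM budget {php_mb} MB -> at most {fpm_children} workers")
print(f"Apache budget {apache_mb} MB -> at most {apache_procs} processes")
```

The PHP-FPM figure would inform a setting such as pm.max_children, while the Apache process count multiplied by ThreadsPerChild bounds MaxRequestWorkers under mpm_event. The point of the sketch is only that both limits are derived from one budget rather than each from total system memory.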

Database Systems

Database Systems are software that efficiently manage, store, and retrieve data in a structured or organized way. They include a database (the data itself, structured in tables or other formats) and a database management system (DBMS), which handles tasks like querying, updating, and securing the data. Examples of popular solutions include MySQL, MariaDB, PostgreSQL, and MongoDB. As most of our dynamic websites are WordPress based we focus on MySQL type of databases, which include MariaDB. MariaDB generally offers a slightly more modern alternative to legacy MySQL, delivering marginally better performance and maintaining API and protocol compatibility. We have from time to time used PostgreSQL when an application we need to field requires it.

MySQL and MariaDB

In the context of WordPress, MySQL and MariaDB are largely interchangeable. I’m sure not everyone will agree completely with that statement, but from the application perspective they are largely equivalent, even if the underlying storage mechanisms are not always the same. We’ve been using MariaDB as our standard solution for years now without incident. We automatically back up and archive databases in our backup systems on a daily basis. Both MySQL and MariaDB are single host database solutions by design.

Galera Cluster

One of the main design goals of distributed web services is the ability to scale as user demand increases. Vertical scaling, the addition of more RAM, CPU and other single host resources, is one way to do this, but only to a point. Vertical scaling reaches its upper bound quickly, even with the fastest servers. Horizontal scaling, the incremental addition of new servers providing redundant services, scales well. One main challenge though is that all redundant services need access to a consistent and stable version of the data. This includes database and flat file access.

There are two ways to solve this problem. The first is to install a fast networked database server which all redundant origin servers access. At some point this approach reaches the same type of upper bound that limits other vertically scaled applications. As usage increases, response time can degrade, slowing down all origin servers. Depending on the data, database traffic can grow to consume a non-trivial amount of LAN bandwidth. We run a small network with a gigabit backbone. Perhaps when we get to the point where we are running 10G or faster internal interconnects (on their own internal networks) we can revisit this approach for some applications. The final nail in the coffin for a single server solution is that it represents a single point of failure. If the sole database server were to fail, all connecting origin servers would also fail.

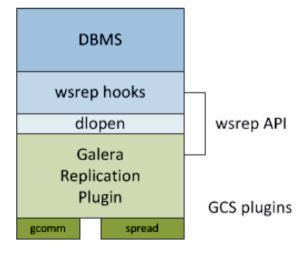

Our solution is a clustered database. We use Galera Cluster, which provides a distributed wrapper around MariaDB. All database content resides locally on each node, which speeds up access times and conserves LAN bandwidth. A more complete technical summary (courtesy of Grok):

Galera Cluster is an open-source, synchronous multi-master replication solution for MariaDB databases. It enables high availability and scalability by allowing multiple database nodes to handle both read and write operations simultaneously, with data consistency maintained across all nodes. Each node holds a complete database copy, and the group communication system, using the wsrep (Write Set Replication) API, replicates changes nearly in real-time.

Key Features:

- Synchronous Replication: Ensures data consistency by committing transactions across all nodes simultaneously.

- Multi-Master Topology: All nodes can accept read and write queries, eliminating single points of failure.

- Automatic Node Provisioning: New nodes can join the cluster and sync data automatically via State Snapshot Transfer (SST) or Incremental State Transfer (IST).

- High Availability: If one node fails, others continue to operate, providing fault tolerance.

- Scalability: Supports scaling out by adding more nodes to handle increased traffic.

- Conflict Resolution: Uses a certification-based replication mechanism to detect and resolve conflicts (e.g., concurrent writes to the same data).

Distributed File Systems

Database systems as described above are used for the storage and maintenance of structured information (table based). The information needs to be consistent at all times across redundant origin servers. Web servers also provide access to static plain files (css, js, html, jpg, gif, png, pdf and others), critical components of most web pages. These content types typically do not change over time and their storage in a DBMS while technically possible would be wasteful and inefficient. With that said, the rest of the design requirements for distributed databases also apply to static file provision.

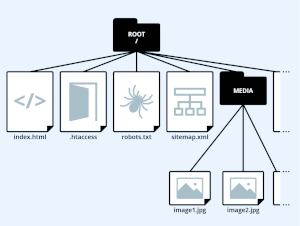

Distributed file systems, like traditional file systems, offer a hierarchical structure that stores files of any type and, for most applications, any size. From an application’s perspective, they function like traditional file systems, but they actively replicate files across multiple machines transparently. A more detailed definition (courtesy of Grok):

A distributed file system (DFS) allows data to be stored, accessed, and managed across multiple servers or nodes in a network, appearing as a single cohesive file system to users. It enables seamless file sharing, scalability, and fault tolerance by distributing data and processing across geographically dispersed or networked machines.

Key Features:

- Distributed Storage: Multiple nodes store files and fragments, often with replication for redundancy.

- Transparency: Users access files as if they were on a local system, unaware of the underlying distribution.

- Scalability: Can handle large amounts of data and users by adding more nodes.

- Fault Tolerance: Data replication and redundancy ensure availability even if some nodes fail.

- Concurrency: Supports multiple users accessing and modifying files simultaneously with consistency mechanisms.

There are many DFS solutions available, each with its own use cases, advantages and disadvantages. Some systems actively operate across high-latency WAN connections, while others thrive in high-performance LAN/VLAN environments. Our focus was high speed, low latency networks and high performance. We evaluated many and our decision came down to the Gluster File System and the Ceph File System. From the research we did, Ceph appears to be the better performing of the two, but it is more complex to set up and has a correspondingly steeper learning curve. As the performance differences for our use case were not huge, we decided to go with the Gluster File System. As we build out our network(s) over time we might want to revisit this issue.

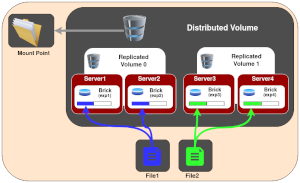

Gluster File System

Gluster File System (GlusterFS) is an open source distributed file system that meets all the key features listed above. It works best in a LAN/VLAN environment where fast communication between cluster nodes is possible. Our GlusterFS volumes are mounted on all origin and reverse proxy servers in a uniform and consistent manner. For most of these servers, the GlusterFS node runs on the same machine as the web server.

To help with the split brain issue common to many distributed systems, we run an odd number of cluster nodes. This way, should a break in the network or loss of a single machine occur, we can reliably bring the cluster back. For our current network, this means that one web server will not be using a co-resident GlusterFS volume, but instead mount the volume from one of the other servers. In our case we do this on our backup or secondary reverse proxy / load balancer system.
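The reasoning behind an odd node count can be sketched with the general majority rule used by many clustered systems. This is a simplified illustration, not a model of how GlusterFS itself evaluates quorum, which is configurable and has more moving parts.

```python
# Simplified majority-quorum sketch. It illustrates the general idea only and
# is not a description of GlusterFS internals.

def has_quorum(reachable_nodes, total_nodes):
    """A partition may keep serving only if it holds a strict majority of nodes."""
    return reachable_nodes > total_nodes // 2


def tolerated_failures(total_nodes):
    """Number of nodes that can be lost while a majority still remains."""
    return (total_nodes - 1) // 2


# Three nodes split 2/1: exactly one side keeps a majority.
print(has_quorum(2, 3), has_quorum(1, 3))

# Four nodes split 2/2: neither side has a majority, so nobody can safely proceed.
print(has_quorum(2, 4), has_quorum(2, 4))

# A fourth node adds cost without adding failure tolerance.
for n in (3, 4, 5):
    print(n, "nodes tolerate", tolerated_failures(n), "failure(s)")
```

With an odd count, any two-way network split leaves one side with a clear majority, which is what makes recovery after a break predictable.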

Integrating GlusterFS content is pretty straightforward. Understanding the memory hierarchy and relative access speeds is the key. For our hosted sites the focus is on WordPress performance and acceleration. The Performance Considerations section below discusses performance and acceleration issues.

Two concepts are critical for our implementation – the creation of a uniform file and volume naming convention across the cluster, and the use of symbolic links to connect everything together. The web servers (both origin and reverse proxy) need a simple and consistent view of the file system hierarchy. The naming convention must be constant so that symbolic links on one system work across nodes. All files needed by the server reside in this local (to the cluster) name space. It includes runtime files like the PHP code and other PHP site-specific configuration information. User contributed content like images, documents and other content also resides in this space. How we split files across GlusterFS and traditional file systems determines how effective the resulting system will be and has huge performance implications.

Performance Considerations

GlusterFS performance analysis involved testing several network and application configurations. We first deployed a three node cluster with two nodes on our HK network and one on our PH network, communicating via a WireGuard tunnel. As suggested by the GlusterFS research we did, performance was poor. It appears that any file system operation on any of the nodes results in real time communication with all other nodes before the commit can take place. The speed and latency issues across the tunnel, while fine for normal SSH or web access, slowed the file system to a crawl making it unusable.

We then built a new cluster residing only on the HK network and tested it with the full website HTDOCS residing on GlusterFS. The cluster worked but seriously degraded the speed of the web service. We lost all the gains seen with a split service. The reason is simple – the origin server needs to have FAST access to all programs and scripts (PHP in the case of WordPress). WordPress pages can easily access 20 or more PHP code files per page. When multiplied by even a small incremental latency this adds up to significant page load times. The extra file system layers added large delays in how quickly the CPU could get this information, even with GlusterFS residing on fast media (NVMe). Returning the core WordPress hierarchy back to fast native storage was essential. The sections below discuss our solution to this problem.
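The multiplication effect can be made concrete with a small worked example. The file count of 20 comes from the text above; the per-file latency values are hypothetical and chosen only to show the scaling, not taken from our measurements.

```python
# Worked arithmetic for the "many files times a small delay" effect.
# The per-file latencies below are hypothetical, not measured values.

def added_page_latency_ms(files_per_page, extra_latency_ms_per_file):
    """Extra time per page when every file read pays an additional fixed delay."""
    return files_per_page * extra_latency_ms_per_file


FILES_PER_PAGE = 20  # "20 or more PHP code files per page"

for extra_ms in (1, 5, 25):
    total = added_page_latency_ms(FILES_PER_PAGE, extra_ms)
    print(f"{extra_ms} ms extra per file -> {total} ms extra per page")
```

The sketch assumes the files are read one after another, so the delays simply add. Even under that simple model, a per-file delay that looks negligible in isolation becomes a visible page load penalty.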

October 2025 Update: The original deployment used three GlusterFS (GFS) volumes. These were for certificates (web2), the core WordPress files (wp-core), and the user upload area (wp-user). See below for descriptions. Even under light loads (fewer than 10 concurrent sessions) we occasionally encountered hanging of one or more of the cluster servers due to GFS problems. This was despite our attempts to tune the file system for higher performance and to take advantage of the extra memory on our systems. Note that GFS is a FUSE (File System in Userspace) implementation. FUSE operations are significantly more expensive in terms of OS resources and take more time to percolate in and out of the kernel than standard file system drivers (ext4 for instance). For our lightly loaded servers we did not expect to notice much if any degradation in performance.

In our case it is unclear whether we were encountering configuration induced problems or whether Gluster by its very nature was not really cut out for the job. My assumption is configuration issues, but some research documentation on FUSE based systems does suggest that performance hits may render it questionable for production use. With that said, 2 of the 3 GFS volumes really did not need to be configured that way. Certificates and core WordPress files very rarely change, so regular rsync between the systems with the files residing on an ext4 volume is actually much faster. In the current configuration (at the time of this writing) only one GFS volume is in use (wp-user), with all others either converted to ext4 or removed completely.

Cluster Namespace and Volumes

We have set up four GlusterFS volumes over the course of the project. As the entries below note, three of them have since been taken out of service, leaving wp-user as the only GlusterFS volume in use as of October 2025. These volumes reside on fast high capacity HDD storage. This allows for these datasets to easily grow over time. As loading on the storage system is intermittent (not constant) the solution has worked out well so far. File systems share identical mount points across all nodes in the cluster creating a uniform file namespace. The volumes are:

- web1: mounted as /web1 – this was the initial volume setup for testing. The volume was taken out of service mid-September 2025.

- web2: mounted as /web2 – CERTBOT certificate storage. The only directory on web2 is /letsencrypt. On the primary origin server (test configuration) the directory /etc/letsencrypt/live/pinoytalk.com is a symbolic link to /web2/letsencrypt/live/pinoytalk.com where the actual files reside. On the load balancers /etc/letsencrypt is a symbolic link to /web2/letsencrypt. This volume was taken out of service in October 2025, with www4 now acting as the master and regular rsync runs to all other cluster nodes.

- wp-core: mounted as /wp-core – Shadow file system (see below) for live website data on the master origin server. NGINX reverse proxy servers pull static files such as images, PDF, CSS, JS and others directly from this volume. This volume was taken out of service in October 2025, with www4 now acting as the master and regular rsync runs to all other cluster nodes.

- wp-user: mounted as /wp-user – this holds WordPress site user data. For a stock WordPress installation this is the /wp-content/uploads/ directory. For the pinoytalk.com test origin servers, /var/www/pinoytalk.www/wp-content/uploads is a symbolic link to /wp-user/pinoytalk/www/uploads. Reverse proxy NGINX systems read directly from this directory, making user upload data immediately visible.
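The symbolic link arrangement for the wp-user volume can be sketched as follows. To stay harmless, the example rebuilds the directory layout under a temporary directory that stands in for the file system root, and the helper name link_uploads is hypothetical. It shows the mechanism only and is not our actual provisioning script.

```python
import os
import tempfile

# Demonstration of linking a site's uploads directory to a shared volume.
# Everything is created under a temporary directory, never under real paths.


def link_uploads(docroot_uploads, shared_uploads):
    """Point the site's uploads directory at the shared volume via a symbolic link."""
    os.makedirs(shared_uploads, exist_ok=True)
    os.makedirs(os.path.dirname(docroot_uploads), exist_ok=True)
    # Only create the link if it is not already there. A real directory at this
    # path would need to be migrated first and is deliberately not handled here.
    if not os.path.islink(docroot_uploads):
        os.symlink(shared_uploads, docroot_uploads)
    return os.path.realpath(docroot_uploads)


root = tempfile.mkdtemp()  # stand-in for "/" in this demo
shared = os.path.join(root, "wp-user/pinoytalk/www/uploads")
docroot = os.path.join(root, "var/www/pinoytalk.www/wp-content/uploads")

resolved = link_uploads(docroot, shared)

# A file written to the shared volume is visible through the web server's path.
with open(os.path.join(shared, "photo.jpg"), "w") as handle:
    handle.write("demo")
visible = os.path.exists(os.path.join(docroot, "photo.jpg"))
print(resolved, visible)
```

Because every node uses the same mount point, the same link target is valid on each origin server, which is why the uniform naming convention matters.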

Shadow Filesystem

As noted above, running origin web servers from GlusterFS volumes proved impractical due to significant performance degradation. To address this, we built a fast master origin server. WordPress sites use the fastest available media (NVMe storage) with a native file system. This approach resolved the origin server’s performance issues. New challenges then became evident from the high volume of concurrent requests on complex pages. These requests overwhelmed the system, causing HTTP errors that prevented many pages from rendering properly. To fix this, we created a shadow file system, residing on a GlusterFS volume, that mirrors the live origin server’s data. Regular automated rsync runs (every three minutes) keep the data reasonably consistent. This data consists mainly of program files, CSS, and JavaScript files (static content), which typically only change during system updates. Because files rarely change between rsync runs, the result is an effective solution.

The wp-core volume stores the shadow filesystem. NGINX load balancers trap all requests for known static file types and serve the content directly instead of reverse proxying the requests to the origin server. Performance greatly increased as the burst traffic problem with Apache went away. The origin server primarily handles PHP (compute engine) type requests, significantly reducing its load. Since static file requests occur less frequently than PHP program requests, the file system overhead issues remain minimal. In addition, NGINX caching increases the performance of servicing these static resources (something NGINX is very good at) directly from its fast cache.
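The routing decision made at the load balancer can be modelled in a few lines. This is a conceptual sketch of the logic only, not NGINX configuration. The extension set and the two labels are assumptions chosen for illustration.

```python
import os

# Conceptual model of the static-versus-dynamic split at the load balancer.
# The extension list is an assumed example, not our actual NGINX configuration.
STATIC_EXTENSIONS = {".css", ".js", ".jpg", ".jpeg", ".gif", ".png", ".pdf"}


def route(request_path):
    """Decide whether a request is served from the shadow files or proxied to the origin."""
    path_only = request_path.split("?", 1)[0]  # ignore any query string
    extension = os.path.splitext(path_only)[1].lower()
    if extension in STATIC_EXTENSIONS:
        return "serve-from-shadow"
    return "proxy-to-origin"


for path in (
    "/wp-content/themes/example/style.css?ver=1",
    "/wp-content/uploads/photo.JPG",
    "/index.php",
    "/",
):
    print(path, "->", route(path))
```

Only requests that fall through to the second branch ever reach Apache, which is why the burst of static requests generated by a complex page no longer lands on the origin server.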

Web Site Rsync

The performance hit from GlusterFS (Apache file read access) necessitates a shadow or split filesystem, as described above. Due to the need for parallel file systems, we need a method for the regular synchronization of data between the master files (Apache HTDOCS area) and the shadow file system (GlusterFS). The wp-core volume stores the shadow files and a cron job (/etc/cronwrap/sync-sites) performs the selective synchronization of files. The master origin server synchronizes data from /var/www to wp-core shadow. Subserver machines (non-master origin servers) synchronize data in the opposite direction, from wp-core shadow to /var/www. The origin master server’s cronjob orchestrates the process, concluding with an SSH call to each subserver to execute the reverse rsync.
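The orchestration order described above can be sketched as a plan of commands. The sketch only builds and prints the commands and does not execute anything. The subserver host names, the remote script path, and the exclude pattern are hypothetical placeholders, and the real sync-sites job may use different rsync options.

```python
import shlex

# Builds the sequence of commands for one sync cycle without running them.
# Host names, the remote script path, and the exclude pattern are hypothetical.


def rsync_command(source, destination, excludes=()):
    """Build an archive-mode rsync command copying the contents of source into destination."""
    command = ["rsync", "-a"]
    for pattern in excludes:
        command.append(f"--exclude={pattern}")
    # Trailing slashes make rsync copy directory contents rather than the directory itself.
    command += [source.rstrip("/") + "/", destination.rstrip("/") + "/"]
    return command


def sync_plan(master_source, shadow, subservers, remote_script):
    """Master pushes to the shadow first, then asks each subserver to pull from it."""
    plan = [rsync_command(master_source, shadow, excludes=["wp-content/uploads"])]
    for host in subservers:
        plan.append(["ssh", host, remote_script])
    return plan


plan = sync_plan(
    master_source="/var/www",
    shadow="/wp-core",
    subservers=["origin2", "origin3"],          # hypothetical host names
    remote_script="/usr/local/bin/pull-sites",  # hypothetical script path
)
for command in plan:
    print(shlex.join(command))
```

On a subserver, the pull script would build the mirror-image command with the same helper, for example rsync_command("/wp-core", "/var/www"). Keeping the steps as small composable pieces mirrors the simple hierarchy of scripts described in the next paragraph.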

We use a simple hierarchy of scripts in place of a single complicated program. These simple scripts are very easy to understand and can be modified, expanded, and enhanced easily as additional sites come and go.

Putting The Pieces Together

This section is incomplete (understatement) but will be filled in over time. Need to decide how much of this to keep at a high level and how much actual configuration, test, and other information to incorporate.

Going from a simple single server configuration to a full blown distributed architecture in one step, the first time around, would be cause to check my sanity. Instead the migration was done in steps, each building upon the ones that came before. Milestones included:

- Single Apache Server – HTTP/2

- Single Apache Server – HTTP/3

- Simple Split – 1 Origin, 1 Reverse Proxy

- Galera Conversion

- GlusterFS Integration

- Multiple Origin Servers

- Multiple Load Balancers

- WordPress Update Procedures

- Templating – How to Quickly Roll Out or Convert New Sites

- New System Administration Tools / Assistants

Where Do We Go From Here?

This is an on-going project. At the time of this writing all the main components have been tested with a domain we are currently not using (other than for testing). All the pieces have been put together and configured by hand, with our internal documentation still a bit incomplete. The first step is to fully review all the components and come up with an initial plan for automating new site rollout or conversion. We will most likely perform a few more test domain conversions by hand to verify our documentation and to work out a templating or automation strategy.

We will migrate our remaining sites, including this one, after completing and documenting the above activities.

Reflections

This project involved a few more new components to learn than most, but none were particularly difficult to get up to speed on. Learning curves were reasonable. Many of these components interact more extensively than in previous projects, but their mature technologies, used in production for several years or longer, offset this complexity. Official documentation for the most part was excellent.

So what made this project a challenge? I had trouble finding documentation that covers the interaction, testing, and verification of the components working together in a distributed environment. As these are not bleeding edge technologies anymore, perhaps my research going in was incomplete. Documentation on how to implement similar solutions under AWS and other cloud umbrellas exists and is easy to find. As we’re not using cloud services such as AWS, but rather building on our own servers, a different solution is called for. Many web services companies implement something similar internally so none of this should be a mystery at this point. I need to revisit this as insights into other implementations will likely be enlightening and potentially show limitations of our current architecture.

Artificial Intelligence (AI) Tools

I’ve been using AI chatbots in a limited way to assist with my research. I know that some feel AI is ready for coding and similar tasks; however, I am not one of them. AI generated games and simulations of balls bouncing around a rotating container are fine. A LOT more than this is needed to justify using the current technologies for mission critical applications. And I suspect this will be the case for quite some time.

With that said, tools such as Perplexity and Grok have been very useful, in a limited way. Other tests I’ve done with ChatGPT and Gemini gave similar results. Doing basic research on the COMPONENTS alone was extremely helpful and significantly reduced initial learning curves. Basic component compatibility and interaction queries often produced good results. The more sophisticated the query and the more component interactions it involved, the more error prone the responses became. The use of AI for specific configuration and more complex queries was not reliable. Perhaps my rudimentary knowledge of Prompt Engineering plays a part in this, but it is not the entire story. I’ll continue to regularly use AI tools for the basic tasks outlined here, but not lean on them (other than for making suggestions) for anything critical.

References

Coming soon